Review Article, J Appl Bioinforma Comput Biol Vol: 12 Issue: 1

In Mental Health Support, Ethical Perspective on the Utility of ChatBots

Khushi Tekriwal*

Department of Computer Science, Allegheny College, George Mason University, Pennsylvania, United States

*Corresponding Author: Khushi Tekriwal

Department of Computer Science, Allegheny College, George Mason University, Pittsburgh, Pennsylvania, United States

Tel: 9874292223

E-mail: khushitekriwal05@gmail.com

Received date: 07 October, 2022, Manuscript No. JABCB-22-78307; Editor assigned date: 10 October, 2022, PreQC No. JABCB-22-78307 (PQ); Reviewed date: 25 October, 2022, QC No. JABCB-22-78307; Revised date: 03 January, 2023, Manuscript No. JABCB-22-78307 (R); Published date: 11 January, 2023, DOI: 10.4172/2329-9533.1000256

Citation: Tekriwal K (2023) In Mental Health Support, Ethical Perspective on the Utility of ChatBots. J Appl Bioinforma Comput Biol 12:1

Abstract

The use of chatbots is altering the dynamic between patients and medical staff. Conversational chatbots are computer programmes that use algorithms to mimic human conversation in a variety of ways, including through text, voice and body language. The user can type in a question and the chatbot responds automatically. A major benefit of chatbot-based systems for electronically delivered treatment and disease management programmes is their potential to improve adherence. This review surveys current chatbot systems in the field of mental health. Early studies have shown promising results for chatbots in psychoeducation and adherence.

Keywords: Conversational User Interface (CUI); Evidence Based Medicine (EBM); Randomized Controlled Trials (RCTs); Depression; Anxiety

Introduction

What are chatbots?

One definition describes a chatbot as "a system that communicates with users utilising natural language in a number of forms, including text, voice, images and video, including body expressions, facial, spoken and/or written" [1]. A chatbot may also be called a chatterbot, virtual agent, dialogue system, Conversational User Interface (CUI) or machine conversation system. In short, a chatbot is an artificial intelligence system designed to hold conversations with users in place of a human. Interacting with a chatbot is easy to begin with because most are text-driven and feature images and unified widgets [2].

The use of chatbots in the healthcare industry has grown rapidly in recent years. Healthcare chatbots help patients, their families and healthcare teams by supporting behaviour change, providing therapy support (for example, Wysa, which offers cognitive behavioural therapy) and assisting with disease management (for example, Babylon Health, which offers digital health consultations and information) [3].

Literature Review

In recent years, the healthcare industry has seen a rise in the number of chatbots aimed at helping with mental health problems [4]. An estimated 29% of the population will experience a mental health problem at some point in their lives, and 10% of children and 25% of adults experience symptoms in a given year [5]. Mental health disorders cause disabling conditions that are associated with lower quality of life. Global losses in output from labour and capital due to these disorders are expected to total around $16 trillion between 2011 and 2030.

Mental health problems are most often treated with medication, talk therapy or both [6]. Although demand for mental health services is high, there are not enough trained professionals to meet it. Global estimates suggest that developing countries have one psychiatrist for every ten million people, compared with nine per 100,000 people in developed countries [7]. The World Health Organization reports that only about 15% of people in developing countries have access to mental health services, compared with 45% of people in developed countries [8]. Left untreated, people with mental health problems may become more suicidal and may even take their own lives.
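Taking the cited estimates at face value, the size of the gap can be made explicit with a short calculation. This only restates the figures above as ratios and adds no new data:

$$
\frac{9 / 100\,000}{1 / 10\,000\,000} = \frac{9 \times 10^{-5}}{10^{-7}} = 900,
\qquad
\frac{45\%}{15\%} = 3
$$

By these estimates, developed countries have about 900 times as many psychiatrists per person as developing countries, and people there are about three times as likely to have access to mental health services.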

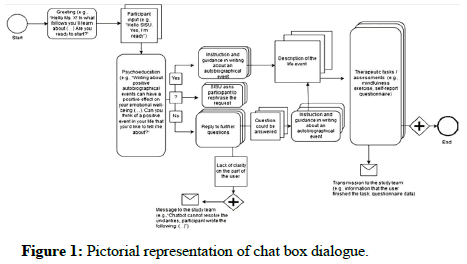

Over the past five years, interest in conversational agents for psychoeducation, behaviour change and self-help has grown; all three areas aim to address the scarcity of resources for treating people with mental health disorders (Figure 1).

Chatbots for mental health

A scoping review by Abd-alrazaq and colleagues found that 53 studies had reported 41 distinct mental health chatbots. The chatbots were created for a variety of functions, including screening (e.g., SimSensei), training (e.g., LISSA) and therapy (e.g., Woebot), with a focus on autism and depression. Seventy percent of the chatbots were deployed as standalone programmes, while 30% used a web-based interface. In 87% of the studies the chatbot led the conversation, while in 13% leadership was shared between the chatbot and the human participants. Virtual humans or avatars were the most common forms of embodiment.

Two popular chatbot platforms in use today are SERMO and Wysa. Wysa is an AI-based chatbot designed to respond with empathy [9]. It integrates a mood tracker that can detect negative moods and, depending on the user's emotional state, either recommends a depression screening test or advises the user to seek professional help. The app also includes guided mindfulness meditation exercises to help with stress, depression and anxiety. In one study, 129 people, split into regular and infrequent chatbot users, participated [10]. Regular Wysa users experienced greater mood improvement than infrequent users (P=0.03), and participants found their interactions with Wysa helpful and encouraging.
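The screening-or-referral behaviour described above can be pictured as a simple rule over recent mood-tracker entries. The sketch below is a hypothetical illustration in Python, not Wysa's implementation: the -2 to +2 mood scale, the seven-entry window and both thresholds are assumptions chosen only to make the logic concrete.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical mood scale: -2 (very negative) to +2 (very positive).
# Both thresholds are illustrative assumptions, not Wysa's actual rules.
NEGATIVE_THRESHOLD = -0.5
SEVERE_THRESHOLD = -1.5


@dataclass
class MoodEntry:
    day: int    # day on which the mood was logged
    score: int  # self-reported mood on the hypothetical -2..+2 scale


def route(entries: list[MoodEntry], window: int = 7) -> str:
    """Suggest a next step from the most recent `window` mood entries."""
    if not entries:
        return "keep_tracking"
    # Keep only the latest entries so old moods do not dominate.
    recent = sorted(entries, key=lambda e: e.day)[-window:]
    avg = mean(e.score for e in recent)
    if avg <= SEVERE_THRESHOLD:
        # Persistently very low mood: point the user to human support.
        return "advise_professional_help"
    if avg <= NEGATIVE_THRESHOLD:
        # Moderately low mood: offer a standard depression screening test.
        return "offer_depression_screening"
    return "keep_tracking"
```

For example, three entries scored -1, -1 and 0 average about -0.67, which falls between the two thresholds, so the sketch offers a screening test rather than a referral. A fixed-threshold rule like this is easy to audit, which matters for safety, but it cannot interpret context the way a clinician would.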

The SERMO app is designed for people with moderate psychological impairment [11]. It uses Cognitive Behavioural Therapy (CBT) techniques to help people learn to regulate their emotions. Based on Albert Ellis's ABC theory (situation, cognition, emotion), the app features a chatbot that prompts users to describe how they feel about specific events in their lives. According to this theory, our emotions and actions are shaped by how we interpret the events and stimuli we take in, whether consciously or subconsciously [12]. Using the user's natural-language input and the data collected, SERMO can automatically determine the user's basic emotion. It currently recognises five emotions: joy, sadness, grief, anger and fear. Depending on the emotion, the system recommends activities or mindfulness exercises as a suitable measure. For the prototype, 13 different dialogues were created with OSCOVA, covering a wide range of user responses to the expression of various emotions and states of mind. Natural language processing techniques and lexicon-based methods are used to identify and categorise emotional states, so SERMO takes a hybrid approach, combining a rule-based conversation flow with Natural Language Understanding (NLU) capabilities. To assess SERMO's usability, the User Experience Questionnaire (UEQ) was administered to 21 participants (people with mental health conditions and psychologists). Users praised the app's usefulness, clarity and aesthetic appeal, while ratings on the hedonic-quality scales, which measure how much fun the app is to use, were generally neutral. The consulted experts agreed that the app would be helpful for people who have trouble communicating in person.
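The hybrid design described above (a fixed rule-based dialogue flow plus lexicon-based emotion recognition that selects an activity or mindfulness exercise) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not SERMO's code: it does not use OSCOVA, and the word lists and the emotion-to-suggestion table are hypothetical. A real system would need far richer lexicons and handling of negation ("not happy").

```python
import re
from collections import Counter
from typing import Optional

# Tiny hypothetical lexicon for the five emotions named in the text.
EMOTION_LEXICON = {
    "joy": {"happy", "glad", "delighted", "great"},
    "sadness": {"sad", "down", "unhappy", "low"},
    "grief": {"loss", "mourning", "grieving", "miss"},
    "anger": {"angry", "furious", "annoyed", "mad"},
    "fear": {"afraid", "scared", "anxious", "worried"},
}

# Hypothetical rule table: detected emotion -> suggested measure.
RECOMMENDATIONS = {
    "joy": "activity: note what went well today",
    "sadness": "activity: plan a small pleasant activity",
    "grief": "mindfulness: guided breathing exercise",
    "anger": "mindfulness: body scan to notice tension",
    "fear": "mindfulness: grounding exercise",
}


def detect_emotion(text: str) -> Optional[str]:
    """Return the emotion whose lexicon words occur most often, or None."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for emotion, words in EMOTION_LEXICON.items():
        counts[emotion] = sum(token in words for token in tokens)
    emotion, hits = counts.most_common(1)[0]
    return emotion if hits > 0 else None


def abc_step(situation: str, thought: str, feeling: str) -> dict:
    """One pass of a rule-based ABC flow.

    A = situation and B = thought are recorded as given; only
    C = the described feeling is classified by the lexicon.
    """
    emotion = detect_emotion(feeling)
    return {
        "situation": situation,
        "thought": thought,
        "emotion": emotion,
        # Fall back to a follow-up prompt when no emotion is recognised.
        "suggestion": RECOMMENDATIONS.get(emotion, "ask a follow-up question"),
    }
```

For instance, `abc_step("Missed the bus", "I always mess things up", "I was angry")` classifies the feeling as anger and suggests the body-scan exercise. Keeping the dialogue order fixed while only the classification step varies mirrors the division of labour in a hybrid rule-based/NLU design.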

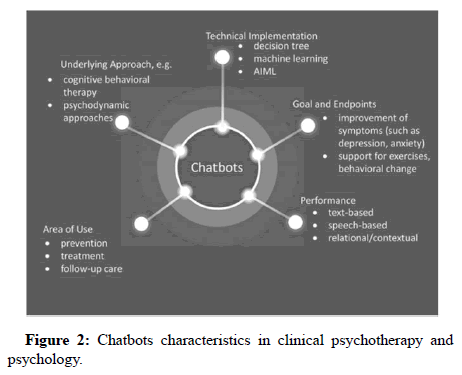

Because mental health professionals are in severely short supply in both the developing and the developed world, more and more people are turning to chatbots for help with their mental health. Artificial Intelligence (AI) algorithms are increasingly being built into chatbots to drive their interactions with users (Figure 2).

Advantages of chatbots in mental health

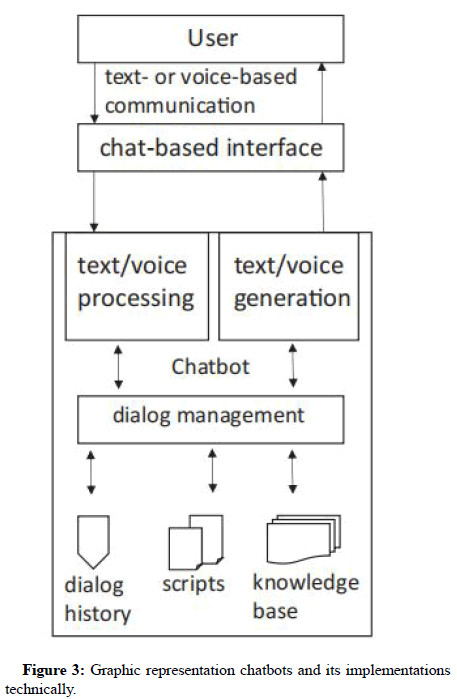

With mental health professionals in short supply worldwide, chatbots could help provide better care at a lower cost while also increasing access to care. For people who have trouble talking to their doctor about their mental health issues because of stigma, chatbots can be a helpful alternative for receiving treatment (Figure 3). As reported by Lucas and colleagues, veterans with Post-Traumatic Stress Disorder (PTSD) were more forthcoming about their symptoms with a chatbot than with non-anonymized and anonymized versions of a self-administered questionnaire [13].

Because chatbots are typically intuitive and simple to use, they can be a useful resource for people with limited language, health literacy or computer skills. Embodied chatbots can engage in simple verbal and nonverbal behaviours that convey empathy, attention and proximity, which may help them form a therapeutic bond with users. Research suggests that even people who have never used a computer before can navigate chatbots easily [14].

Traditional computer-based interventions for mental health have been shown to be effective, but they suffer from low adherence and high attrition because they lack the engaging human interaction that in-person meetings with healthcare professionals provide [15]. Chatbots could be a good alternative to such interventions because they converse with users in a natural and engaging way.

Previous studies have reported potential advantages of using chatbots for mental health, and chatbots can help with a variety of mental health problems. Chatbots have been found to be more effective than eBooks in reducing symptoms of anxiety (P=0.02) and depression (P=0.017) [16]. Chatbots can also provide a safe space for people to learn and practise interpersonal skills, such as conducting a successful job interview, without fear of criticism. In one study, participants who used a chatbot improved their job interview skills and confidence more than those on a waiting list (P=0.05) [17]. Chatbots may also be able to detect one or more mental health conditions.

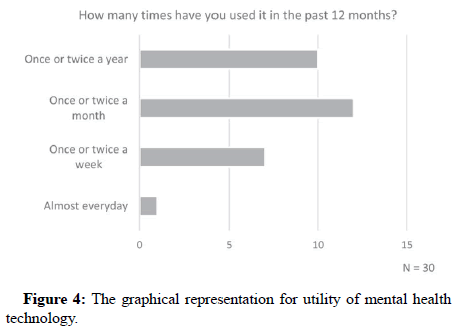

As noted above, chatbots may widen access to lower-cost care and offer an alternative for people who avoid discussing their mental health with a doctor because of stigma (Figure 4) [18].

Ethical challenges

Many mental health chatbots can be found in app stores; however, many of them are not evidence-based, or at least the knowledge on which they are founded is not supported by relevant research [19]. To be credible and useful, mental health chatbots should rely on clinical evidence: they should incorporate therapeutic procedures that are already used in clinical practice and have been shown to be helpful. In addition, research on the therapeutic use of chatbots in mental health remains scarce. According to a systematic review, it is difficult to draw definitive conclusions about the effect of chatbots on several mental health outcomes because of the high risk of bias in the included studies, the low quality of the evidence, the small number of studies assessing each outcome, the small sample sizes and the conflicting results of some studies. Given these limitations, users could be harmed by inappropriate recommendations or undetected risks.

Discussion

Chatbots must maintain users' privacy and confidentiality to protect the sensitive mental health data they collect. Unlike doctor-patient interactions, in which the patient's right to privacy and confidentiality is respected, chatbots frequently do not take these factors into account. Most chatbots, particularly those offered on social media platforms, do not allow users to communicate anonymously, so communications can be linked to particular users. Several chatbots state plainly in the terms and conditions on their websites that they are free to use and share the data they collect for any number of purposes. Users, however, frequently accept such terms without reading them carefully, partly because of their complex, legalistic language, and so may not realise that their data will not be kept confidential. The distributor of a chatbot could therefore sell, trade or use the data for advertising. The clearest illustration is the Facebook-Cambridge Analytica scandal, in which Facebook improperly shared the personal information of millions of its users with Cambridge Analytica. Cyberattacks are another potential problem, as they could expose users' personal health data to uses that are not yet understood. Together, these problems affect how willing users are to interact with chatbots and how much sensitive information they are willing to disclose.

Another difficulty associated with employing chatbots is ensuring users' safety. At present, most chatbots cannot handle critical scenarios in which their users' safety is at stake. This may be because chatbots fail to place users' messages in context, to understand the emotional cues that users provide and to recall users' past conversations. Although certain chatbots, such as Wysa, offer quick access to support from a mental health professional, such services are typically not free and are not available to people under 18 years of age. A further safety concern is overdependence on chatbots: because chatbots are so easy to access, users may become overly attached to or dependent on them, which may exacerbate addictive behaviours and lead users to avoid face-to-face contact with mental health specialists.

Many AI chatbots are designed to pass the Turing test, that is, to convince users that they are conversing with a real person rather than a computer. Programming empathy into a chatbot and giving it human-like responses can lead patients to believe they are talking to a real person, and closely mirroring how therapists behave might strengthen this impression further. However, users have a right to know with whom they are talking, so from a healthcare provider's standpoint it would be unethical to mislead them in this way. In some societies, conversing with a computer or robot rather than a human being may also be considered demeaning. To prevent this ethical dilemma, Kretzschmar and colleagues proposed that chatbot developers inform users, and keep reminding them, that they are talking with a computer that has a limited capacity to grasp their needs. Most chatbots also lack the capacity to show genuine empathy or sympathy, both of which are essential components of psychotherapy, so many individuals may not be comfortable with their use in mental healthcare. Chatbot technology could also be misused, for example to replace established services, which could exacerbate existing health inequalities. Ethical and regulatory frameworks are lacking for digital health interventions in general and for mental health applications in particular. Recent work has begun to address these issues: a group of patients, clinicians, researchers, insurance organisations, technology companies and programme officers from the US National Institute of Mental Health came together to establish a consensus statement on criteria for mental health apps [20].
The group agreed on standards in four areas: data safety and privacy; effectiveness; user experience and adherence; and data integration.

Conclusion

The linguistic abilities of mental health chatbots still need to improve, in particular their capacity to comprehend user input and respond appropriately to it. One of the biggest obstacles is developing a reliable method of identifying emergency situations and devising an appropriate response to them. Another open question is how to tailor chatbots to individual users; learning from users' feedback and conversations might help. One aspect of tailoring is taking into account the user's level of health literacy, so that the style or complexity of the language can be adjusted according to the information the user provides. Treatment plans, for instance, could be tailored to a patient by retrieving relevant information from their medical history, which would require a way for the chatbot to obtain and use such information on the fly.

Evaluating mental health chatbots is important to ensure that they are used and are helpful. Which factors matter most when assessing the technical challenges of healthcare chatbots, and which metrics and standards should be used? Randomized Controlled Trials (RCTs), the gold standard of "level 1" evidence in Evidence Based Medicine (EBM), would be ideal for evaluating chatbots. Conducted as summative evaluations of electronic interventions, these trials measure predefined health outcomes. More studies are needed to determine how mental health professionals can benefit from implementing chatbots.

In summary, amid worldwide shortages of mental health professionals, chatbots could help provide better care at a lower cost while widening access, and they offer an alternative for people deterred by stigma: Lucas and colleagues found that veterans with PTSD disclosed more about their symptoms to a chatbot than on a self-administered questionnaire, whether or not the questionnaire was anonymous.

References

- Inkster B, Sarda S, Subramanian V (2018) An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: Real-world data evaluation mixed-methods study. JMIR Mhealth Uhealth 6:e12106.

- Tschanz M, Dorner TL, Holm J, Denecke K (2018) Using eMMA to manage medication. Computer 51:18-25.

- Steel Z, Marnane C, Iranpour C, Chey T, Jackson JW, et al. (2014) The global prevalence of common mental disorders: A systematic review and meta-analysis 1980-2013. Int J Epidemiol 43:476-493.

- Whiteford HA, Ferrari AJ, Degenhardt L, Feigin V, Vos T (2015) The global burden of mental, neurological and substance use disorders: An analysis from the global burden of disease study 2010. PLoS One 10:e0116820.

- Jones SP, Patel V, Saxena S, Radcliffe N, Ali Al-Marri S, et al. (2014) How Google's 'Ten Things We Know To Be True' could guide the development of mental health mobile apps. Health Aff (Millwood) 33:1603-1611.

- Cuijpers P, Sijbrandij M, Koole SL, Andersson G, Beekman AT, et al. (2013) The efficacy of psychotherapy and pharmacotherapy in treating depressive and anxiety disorders: A meta-analysis of direct comparisons. World Psychiatry 12:137-148.

- Murray CJ, Vos T, Lozano R, Naghavi M, Flaxman AD, et al. (2012) Disability-Adjusted Life Years (DALYs) for 291 diseases and injuries in 21 regions, 1990-2010: A systematic analysis for the global burden of disease study 2010. Lancet 380:2197-2223.

- Oladeji BD, Gureje O (2016) Brain drain: A challenge to global mental health. BJPsych Int 13:61-63.

- Anthes E (2016) Mental health: There’s an app for that. Nature 532:20-23.

- Hester RD (2017) Lack of access to mental health services contributing to the high suicide rates among veterans. Int J Ment Health Syst 11:47.

- Abd-alrazaq AA, Alajlani M, Alalwan AA, Bewick BM, Gardner P, et al. (2019) An overview of the features of chatbots in mental health: A scoping review. Int J Med Inform 132:103978.

- Lucas GM, Rizzo A, Gratch J, Scherer S, Stratou G, et al. (2017) Reporting mental health symptoms: Breaking down barriers to care with virtual human interviewers. Front Robot AI 4:51.

- Bickmore TW, Caruso L, Clough-Gorr K, Heeren T (2005) 'It's just like you talk to a friend': Relational agents for older adults. Interact Comput 17:711-735.

- Fitzpatrick KK, Darcy A, Vierhile M (2017) Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A Randomized Controlled Trial. JMIR Ment Health 4:e19.

- Fulmer R, Joerin A, Gentile B, Lakerink L, Rauws M (2018) Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: Randomized controlled trial. JMIR Ment Health 5:e64.

- Smith MJ, Ginger EJ, Wright M, Wright K, Humm LB, et al. (2014) Virtual reality job interview training for individuals with psychiatric disabilities. J Nerv Ment Dis 202:659-667.

- Bendig E, Erb B, Schulze-Thuesing L, Baumeister H (2019) The next generation: Chatbots in clinical psychology and psychotherapy to foster mental health: A scoping review. Verhaltenstherapie 1-13.

- Koulouri T, Macredie RD, Olakitan D (2022) Chatbots to support young adults’ mental health: An exploratory study of acceptability. ACM Trans Interact Intell Syst 12:1-39.

- Kretzschmar K, Tyroll H, Pavarini G, Manzini A, Singh I (2019) Can your phone be your therapist? Young people's ethical perspectives on the use of fully automated conversational agents (Chatbots) in mental health support. Biomed Inform Insights 11:1178222619829083.

- Miller E, Polson D (2019) Apps, avatars and robots: The future of mental healthcare. Issues Ment Health Nurs 40:208-214.
